Using AWS SQS with a Node.js Application

Posted on November 10, 2021 at 08:11 AM

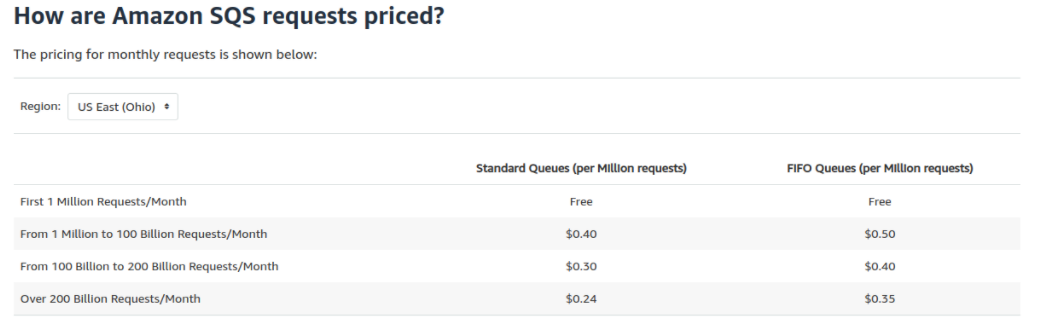

SQS pricing

Amazon SQS is a pay-per-use web service:

- Pay only for what you use

- No minimum fee

More About SQS

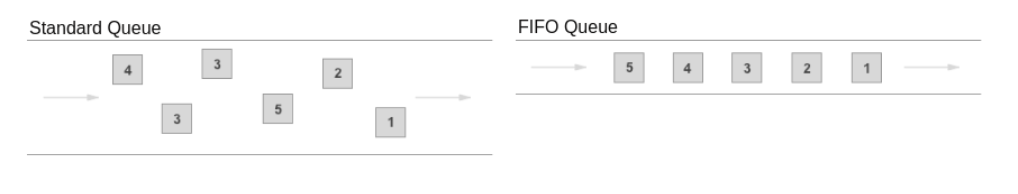

SQS offers two types of message queues:

- Standard queue

- FIFO queue

Standard Queue

- Nearly Unlimited Throughput: It supports a nearly unlimited number of transactions per second (TPS) per API action.

- At-Least-Once Delivery: A message is delivered at least once, but occasionally more than one copy of a message is delivered.

- Best-Effort Ordering: Occasionally, messages might be delivered in an order different from the one in which they were sent. See the official Amazon SQS documentation for more details.
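Because a standard queue can deliver the same message more than once, consumers are usually written to be idempotent. The sketch below is one way to do that, assuming a single Node.js process: it remembers processed `MessageId` values in an in-memory Set. `makeIdempotentHandler` is a hypothetical helper name. Note that this only catches repeated deliveries of the same stored message; if a producer sends the same content twice, each copy gets its own `MessageId`, so keying on a business identifier in the body (kept in a shared store such as a database) is more robust.

```javascript
// Hypothetical helper: wraps a handler so repeated deliveries of the same
// SQS message (same MessageId) are processed only once.
// Assumption: a single process; the Set is lost on restart.
function makeIdempotentHandler(handler) {
  const processedIds = new Set();

  return async function handleOnce(message) {
    if (processedIds.has(message.MessageId)) {
      return false; // duplicate delivery: skip the work
    }
    await handler(message); // if this throws, the id is not recorded
    processedIds.add(message.MessageId);
    return true;
  };
}

// Usage example with a stand-in handler and two deliveries of one message.
const handleOnce = makeIdempotentHandler(async (msg) => {
  console.log(`Resizing image for ${msg.Body}`);
});

(async () => {
  const msg = { MessageId: 'abc-123', Body: 'photo.jpg' };
  console.log(await handleOnce(msg)); // true
  console.log(await handleOnce(msg)); // false
})();
```

Recording the id only after the handler succeeds means a failed attempt can still be retried when SQS redelivers the message.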

FIFO Queue

- High Throughput: By default, FIFO queues support up to 300 API calls per second per action (send, receive, or delete). Batching up to 10 messages per call raises this to 3,000 messages per second, and enabling high throughput mode for FIFO queues raises the limits further.

- Exactly-Once Processing: A message is delivered once and remains available until a consumer processes and deletes it. Duplicates aren’t introduced into the queue.

- First-In-First-Out Delivery: The order in which messages are sent and received is strictly preserved.
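On a FIFO queue, every SendMessage or SendMessageBatch entry needs a `MessageGroupId`, plus a `MessageDeduplicationId` unless content-based deduplication is enabled on the queue. The sketch below groups message bodies into SendMessageBatch requests of at most 10 entries (the per-request limit) to take advantage of batching. The helper name `buildFifoBatches`, the `orders` group id, the placeholder queue URL and the SHA-256-based deduplication id are illustrative assumptions; each resulting object is meant to be passed to `sqs.sendMessageBatch(params)` from aws-sdk v2.

```javascript
const crypto = require('crypto');

// SendMessageBatch accepts at most 10 entries per request.
const MAX_BATCH_SIZE = 10;

// Hypothetical helper: turns message bodies into SendMessageBatch params for a
// FIFO queue. All messages share one group, so SQS delivers them in order.
function buildFifoBatches(queueUrl, bodies, groupId) {
  const batches = [];
  for (let start = 0; start < bodies.length; start += MAX_BATCH_SIZE) {
    const chunk = bodies.slice(start, start + MAX_BATCH_SIZE);
    batches.push({
      QueueUrl: queueUrl,
      Entries: chunk.map((body, i) => ({
        Id: `msg-${start + i}`, // must be unique within one batch request
        MessageBody: body,
        MessageGroupId: groupId,
        // Assumption: identical bodies count as duplicates, mirroring
        // content-based deduplication (which hashes the body with SHA-256).
        MessageDeduplicationId: crypto
          .createHash('sha256')
          .update(body)
          .digest('hex'),
      })),
    });
  }
  return batches;
}

// Usage: 23 bodies become batches of 10, 10 and 3 entries.
const bodies = Array.from({ length: 23 }, (_, n) => JSON.stringify({ orderId: n }));
const batches = buildFifoBatches(
  'https://sqs.us-east-1.amazonaws.com/123456789012/TestQueue.fifo', // placeholder
  bodies,
  'orders'
);
console.log(batches.map((b) => b.Entries.length)); // [ 10, 10, 3 ]
```

Putting every message in one group preserves a single global order; using several group ids (for example one per customer) lets SQS deliver different groups in parallel while keeping order within each group.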

What queue type should I use?

You should use standard queues as long as your application can process messages that arrive out of order or more than once, for example, resizing images after upload. On the other hand, you should use FIFO queues if your application cannot tolerate duplicates or out-of-order delivery, for example, to prevent customers from being debited twice after an order is placed.

Example Application in Node.js

What we will do:

- Create a standard SQS queue on AWS.

- Create a producer (producer.js) to send messages to the queue.

- Create a consumer (consumer.js) to consume messages from the queue.

Let’s begin.

Create a Standard SQS Queue

Queue prerequisites:

- An Amazon Web Services account

- An IAM user

- The IAM user's access key ID and secret access key

Steps:

- Log in to your AWS account.

- In the AWS Management Console, search for and select Simple Queue Service.

- Under the heading “Get started”, click “Create queue”.

- Type “TestQueue” as the queue name.

- Click “Create queue” at the bottom of the page. We will use the default settings, which create a standard queue.

- Copy the URL. It will be used later.
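The same queue can also be created from code. The sketch below uses `createQueue` from the aws-sdk v2 `AWS.SQS` client; `createQueueParams` and `createQueue` are hypothetical helper names, and the client is passed in as a parameter so the function can be tried with a stub. It also shows how the two queue types differ at creation time: a FIFO queue name must end in `.fifo` and the queue needs the `FifoQueue` attribute.

```javascript
// Hypothetical helper: builds CreateQueue params for a standard or FIFO queue.
function createQueueParams(queueName, { fifo = false } = {}) {
  if (!fifo) {
    return { QueueName: queueName }; // standard queue, default settings
  }
  // FIFO queue names must end with ".fifo"; attribute values are strings.
  const name = queueName.endsWith('.fifo') ? queueName : `${queueName}.fifo`;
  return { QueueName: name, Attributes: { FifoQueue: 'true' } };
}

// Creates the queue and returns its URL. `sqs` is an aws-sdk v2 AWS.SQS
// instance (for example the one exported by sqs.js), or any stub with the
// same createQueue(params).promise() shape.
async function createQueue(sqs, queueName, options) {
  const result = await sqs.createQueue(createQueueParams(queueName, options)).promise();
  return result.QueueUrl; // save this URL for the producer and consumer
}

// Usage: createQueue(require('./sqs'), 'TestQueue').then(console.log);
```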

Create the Node.js Application

Application prerequisites:

- Knowledge of ES6 standards

- A code editor (I’ll be using VS Code)

- The following from AWS:

  - AWS access key ID

  - AWS secret access key

  - AWS region

Steps:

- Run the following commands in a terminal:

  - mkdir queueServices

  - cd queueServices

  - npm i aws-sdk sqs-consumer@5 (sqs-consumer v5 works with the aws-sdk v2 client used below; later major versions expect AWS SDK v3)

- Open the folder in VS Code.

- Create these files:

  - sqs.js

  - producer.js

  - consumer.js

Code snippets:

sqs.js

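```javascript
const AWS = require('aws-sdk');

// Fill in your own region and IAM user credentials. Reading them from the
// standard AWS environment variables keeps secrets out of source control.
AWS.config.update({
  region: process.env.AWS_REGION, // e.g. 'us-east-1'
  accessKeyId: process.env.AWS_ACCESS_KEY_ID,
  secretAccessKey: process.env.AWS_SECRET_ACCESS_KEY,
});

// A single SQS service object shared by the producer and the consumer.
const sqs = new AWS.SQS({ apiVersion: '2012-11-05' });

module.exports = sqs;
```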
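The producer.js file can be as small as the sketch below, assuming the aws-sdk v2 client exported by sqs.js. The `sendMessage` wrapper name, the JSON payload shape and the `QUEUE_URL` environment variable are illustrative assumptions; the client is a parameter so the function can also be called with a stub.

```javascript
// producer.js (sketch). `sqs` is the aws-sdk v2 client exported by sqs.js;
// it is passed in so the function can also be called with a stub.
async function sendMessage(sqs, queueUrl, payload) {
  const params = {
    QueueUrl: queueUrl, // the URL copied after creating TestQueue
    MessageBody: JSON.stringify(payload), // SQS message bodies are strings
  };
  const result = await sqs.sendMessage(params).promise();
  return result.MessageId; // ID assigned by SQS
}

// Usage (assumes QUEUE_URL holds the TestQueue URL):
// sendMessage(require('./sqs'), process.env.QUEUE_URL, { orderId: 'A1' })
//   .then((id) => console.log(`Sent message ${id}`))
//   .catch(console.error);
```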

The consumer relies on the sqs-consumer package, which works as follows:

- The queue is polled continuously for messages using long polling.

- Messages are deleted from the queue once the handler function completes successfully.

- If you use handleMessageBatch, delete the successfully processed messages yourself, then throw an error so that the remaining messages go back to the queue.

- Throwing an error (or returning a rejected promise) from the handler function leaves the message in the queue. An SQS redrive policy can move messages that cannot be processed to a dead-letter queue.

- By default, messages are processed one at a time: a new message is not received until the previous one has been processed. To process messages in parallel, set the batchSize option (up to 10).

- By default, the Node.js HTTP/HTTPS agent used by the AWS SDK creates a new TCP connection for every request (see the AWS SQS documentation). To avoid the cost of establishing a new connection each time, pass in an SQS instance whose HTTPS agent is created with keepAlive: true, so existing connections are reused.
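A minimal consumer.js along these lines might look like the sketch below. It assumes sqs-consumer v5 (the release line that accepts an aws-sdk v2 client) and a hypothetical message body carrying an `orderId`. `handleMessage` throws for malformed messages so they stay in the queue, `batchSize` enables parallel processing, and the SQS client gets a keep-alive HTTPS agent through the aws-sdk v2 `httpOptions.agent` option. `startConsumer` is a hypothetical wrapper that takes the library objects as parameters so it can be exercised with stubs.

```javascript
const https = require('https');

// Handler for one message. Resolving lets sqs-consumer delete the message;
// throwing leaves it in the queue so it is received again later.
async function handleMessage(message) {
  const payload = JSON.parse(message.Body); // malformed JSON throws
  if (payload.orderId === undefined) { // hypothetical message shape
    throw new Error(`Message ${message.MessageId} has no orderId`);
  }
  console.log(`Processing order ${payload.orderId}`);
}

// Starts polling. `Consumer` is the class exported by sqs-consumer (v5 API)
// and `AWS` the aws-sdk v2 module; both are parameters so they can be stubbed.
function startConsumer(Consumer, AWS, queueUrl) {
  const app = Consumer.create({
    queueUrl,
    handleMessage,
    batchSize: 10, // receive up to 10 messages per poll, processed in parallel
    // Reuse TCP connections instead of opening one per request.
    sqs: new AWS.SQS({
      apiVersion: '2012-11-05',
      httpOptions: { agent: new https.Agent({ keepAlive: true }) },
    }),
  });
  app.on('error', (err) => console.error(err.message));
  app.on('processing_error', (err) => console.error(err.message));
  app.start();
  return app;
}

// Usage (sqs.js applies AWS.config.update to the shared aws-sdk module):
// const AWS = require('aws-sdk');
// require('./sqs');
// startConsumer(require('sqs-consumer').Consumer, AWS, process.env.QUEUE_URL);
```

If the process crashes midway through a message, that message is not lost: it becomes visible again after its visibility timeout and is delivered to the next poll.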
